May 2024

What is Data Quality?

Data quality refers to how well data serves its intended purpose. It is evaluated across attributes such as accuracy, completeness, consistency, and timeliness. The concept is central to data management and analytics because it determines how reliable and useful data is for the purposes it is meant to support.

Put simply, data quality measures how well data meets the needs of its users. In a business context, for example, high-quality data is accurate, complete, and timely, which enables informed decision-making and efficient business processes. Data quality has many dimensions. Traditional measures include accuracy, completeness, consistency, validity, uniqueness, and integrity. Beyond these, dimensions such as relevance, accessibility, interpretability, and coherence are also considered crucial in evaluating data quality.

Moreover, data quality is not a static attribute; it is an ongoing assessment that evolves with the data's usage and context. Methodologies and tools for measuring and improving data quality are adapted to the specific needs of the organization and the nature of the data itself. This adaptability is essential, because what counts as 'quality' data can vary significantly across industries, applications, and user requirements.

Why is Data Quality Important?

Data quality is a cornerstone of effective decision-making and organizational success. High-quality data enables organizations to manage growing data volumes efficiently and to achieve their strategic business intelligence goals. This matters particularly in a data-driven world, where the accuracy, completeness, and timeliness of information directly affect the quality of decisions.

Impact on Decision-Making and Organizational Success

Data quality plays a crucial role in managerial decision-making and organizational performance. Studies have shown that data quality directly affects the effectiveness of business intelligence tools and thereby influences organizational success. In addition, a company's decision-making culture can moderate the relationship between information quality and its use. This in turn affects how far quality information contributes to organizational success.

Data warehouse success, for instance, is significantly influenced by system quality and information quality. These in turn shape the warehouse's impact on individual and organizational decision-making. A robust data quality assessment reduces the risk of decisions based on poor data and so supports better organizational performance.

Examples from Industries Highlighting the Importance of High-Quality Data

In the healthcare sector, high-quality data is critical for accurate diagnoses, effective treatment plans, and successful patient outcomes. This data encompasses patient demographics, medical history, lab results, imaging scans, and medication information. Inconsistencies, inaccuracies, or missing data points within these elements can lead to misdiagnoses, inappropriate treatment decisions, and potentially severe consequences for patient well-being. For example, an incomplete or inaccurate list of medication allergies in a patient's record could result in a harmful drug being administered, jeopardizing the patient's health and safety.

The automotive and supply chain industries also rely heavily on high-quality data for product development, design, inventory management, demand forecasting, and logistics. Precise, accurate data is essential for these industries to operate efficiently and safely and to avoid costly mistakes. Real-time data plays a crucial role in optimizing processes and ensuring timely deliveries.

By prioritizing data quality and implementing robust data management practices, organizations across various sectors can ensure accurate insights and efficient operations, and achieve their strategic goals.

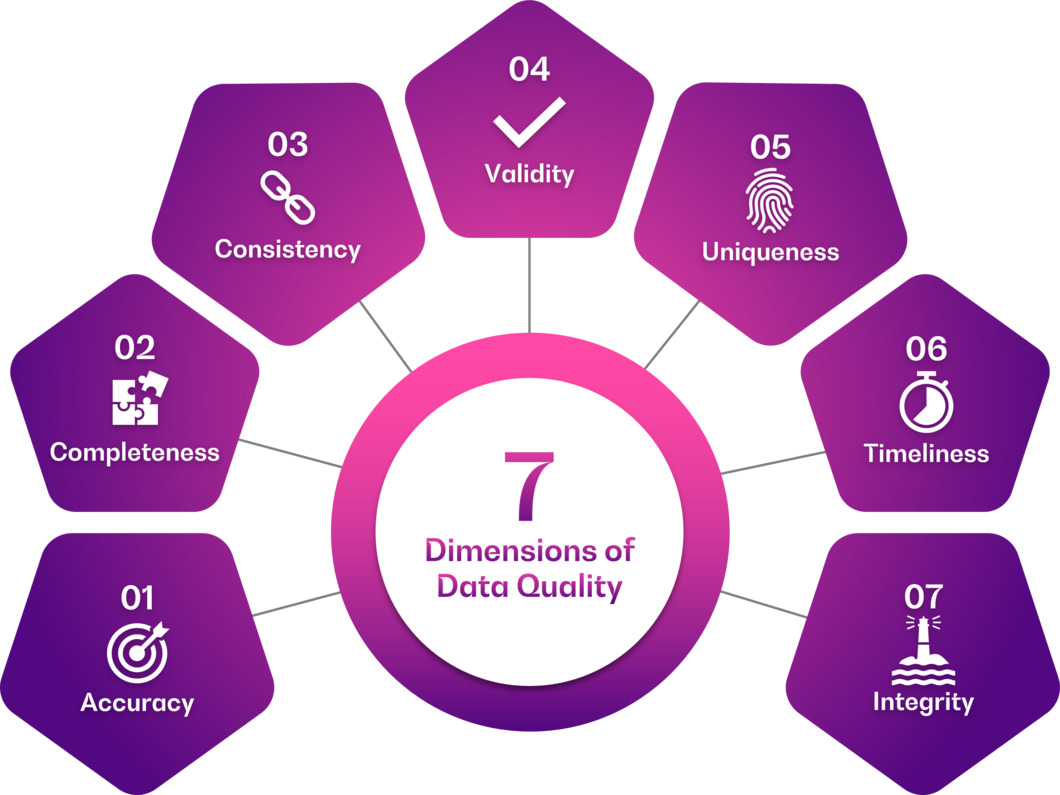

What are the Dimensions of Data Quality?

The concept of data quality encompasses a range of dimensions that are critical to assessing and improving the value of data in various contexts. Understanding these dimensions is essential for ensuring that data meets the necessary standards for its intended use.

Traditional Dimensions of Data Quality

The traditional dimensions of data quality, as identified in research, include:

- Accuracy refers to the extent to which data is correct, dependable, and free from error. For example, in a medical database, a patient's blood type must be recorded accurately, as any discrepancy can lead to dangerous situations during procedures like blood transfusions.

- Completeness ensures that all necessary data is present. In customer databases, for instance, every entry must have complete contact details and transaction records for effective relationship management.

- Consistency maintains data uniformity across different datasets and systems. For businesses with multiple branches, sales data must be reported in a consistent format to allow for accurate comparisons and analyses.

- Validity ensures that data conforms to specific formats and standards. A user registration system, for instance, must verify that the format of email addresses entered is valid to ensure successful communication.

- Uniqueness ensures that no data entry is duplicated within a dataset. The uniqueness of each entry in a voter registry is crucial to prevent duplicate records and potential voting fraud.

- Timeliness involves data being up-to-date and available when needed. In the context of stock trading, for example, the timeliness of market data is non-negotiable, as even a minor delay can result in significant financial implications for traders.

- Integrity preserves the accuracy and consistency of data over its lifecycle. The banking sector exemplifies this, where maintaining the integrity of transaction records is critical to user trust and regulatory compliance.
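Several of these traditional dimensions can be expressed as simple ratios over a dataset. The sketch below illustrates this under stated assumptions and is not a standard implementation. The customer records, field names, email pattern, and 90-day freshness window are all hypothetical, and each function returns a score between 0 and 1.

```python
import re
from datetime import datetime, timedelta, timezone

# Hypothetical customer records; the field names are illustrative assumptions.
RECORDS = [
    {"id": 1, "email": "ana@example.com", "phone": "555-0101",
     "updated": datetime(2024, 5, 1, tzinfo=timezone.utc)},
    {"id": 2, "email": "not-an-email", "phone": None,
     "updated": datetime(2023, 1, 15, tzinfo=timezone.utc)},
    {"id": 2, "email": "bo@example.com", "phone": "555-0102",
     "updated": datetime(2024, 4, 20, tzinfo=timezone.utc)},
]

# Deliberately simple email format rule (assumption); production validation is stricter.
EMAIL_PATTERN = re.compile(r"[^@\s]+@[^@\s]+\.[^@\s]+")


def completeness(records, fields):
    """Share of required field values that are present (not None or empty)."""
    total = len(records) * len(fields)
    filled = sum(1 for r in records for f in fields if r.get(f) not in (None, ""))
    return filled / total if total else 1.0


def validity(records, field, pattern):
    """Share of present values that match the expected format."""
    values = [r[field] for r in records if r.get(field)]
    if not values:
        return 1.0
    return sum(1 for v in values if pattern.fullmatch(v)) / len(values)


def uniqueness(records, key):
    """Ratio of distinct key values to total records (1.0 means no duplicates)."""
    keys = [r[key] for r in records]
    return len(set(keys)) / len(keys) if keys else 1.0


def timeliness(records, field, max_age, now):
    """Share of records updated within the allowed age window."""
    if not records:
        return 1.0
    fresh = sum(1 for r in records if now - r[field] <= max_age)
    return fresh / len(records)


if __name__ == "__main__":
    now = datetime(2024, 5, 15, tzinfo=timezone.utc)
    print(f"completeness: {completeness(RECORDS, ['email', 'phone']):.2f}")  # 0.83
    print(f"validity:     {validity(RECORDS, 'email', EMAIL_PATTERN):.2f}")  # 0.67
    print(f"uniqueness:   {uniqueness(RECORDS, 'id'):.2f}")                  # 0.67
    print(f"timeliness:   {timeliness(RECORDS, 'updated', timedelta(days=90), now):.2f}")  # 0.67
```

In practice, each score would be compared against a target agreed with the data's users rather than read in isolation.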

Additional Dimensions

As data environments become more complex, especially with the advent of big data and AI, additional dimensions of data quality have emerged:

- Relevance ensures that data is applicable and useful for the purposes for which it is intended. Consider a retail company that gathers consumer data to predict buying trends. The relevance of this data is paramount; only recent purchasing patterns should be analysed to reflect the current market accurately.

- Accessibility concerns the ease with which data can be accessed and used by its intended users. A tangible example is a cloud storage service, which must ensure that users can access their files from anywhere, at any time, and on any device without issue.

- Interpretability refers to the extent to which data is understandable and can be comprehensively interpreted. For instance, census data should come with clear metadata so researchers can easily understand and utilize the information provided.

- Coherence refers to data within a dataset being logically connected and consistent. Economic reports provide a clear example: indicators such as inflation rates and GDP growth should relate logically to one another to present a coherent economic overview for a given period.
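Relevance and coherence can also be turned into simple checks. The sketch below assumes hypothetical purchase rows and a 180-day analysis window for relevance. For coherence it uses one illustrative rule: regional sales figures should add up to the reported total. Real coherence checks, such as the relationship between inflation and GDP growth, usually need domain-specific rules.

```python
from datetime import date, timedelta

# Hypothetical purchase rows: (purchase_date, region, amount).
PURCHASES = [
    (date(2024, 4, 2), "north", 120.0),
    (date(2024, 4, 28), "south", 80.0),
    (date(2022, 11, 5), "north", 300.0),
]


def relevant(rows, as_of, window_days):
    """Relevance: keep only purchases that fall inside the analysis window."""
    cutoff = as_of - timedelta(days=window_days)
    return [row for row in rows if row[0] >= cutoff]


def regional_totals(rows):
    """Aggregate purchase amounts per region."""
    totals = {}
    for _, region, amount in rows:
        totals[region] = totals.get(region, 0.0) + amount
    return totals


def is_coherent(parts, reported_total, tolerance=0.01):
    """Coherence (illustrative rule): the parts must reconcile with the reported total."""
    return abs(sum(parts.values()) - reported_total) <= tolerance


if __name__ == "__main__":
    recent = relevant(PURCHASES, as_of=date(2024, 5, 15), window_days=180)
    totals = regional_totals(recent)
    print(totals)                      # {'north': 120.0, 'south': 80.0}
    print(is_coherent(totals, 200.0))  # True
    print(is_coherent(totals, 210.0))  # False
```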

Data Quality in Research Contexts

In research contexts, data quality also emphasizes rigour, integrity, credibility, and validity. These aspects ensure that the data used in scientific studies can be trusted for accurate interpretations and meaningful conclusions.

Methodologies for Data Quality Assessment and Improvement

Data quality assessment and improvement draw on a range of best practices. These cover preparation, deployment, maintenance, and data acquisition, each planned with the available resources and capabilities in mind.

In healthcare, for example, improving data quality involves attention to staffing patterns, making health data more accessible, developing a single source of information, and conducting supportive supervision visits. For patient experience data, employing evidence-based approaches and maintaining an internal system for communicating patient and family experience information is vital.

Context-Based Data Quality Metrics

Applying context-based data quality metrics, especially in data warehouse systems, is another critical area. Here, data quality plans are evaluated by at least two readers, and measures of agreement between them are reported to ensure consistency and accuracy. The ten-step process for quality data is a systematic approach adopted by many organizations. It addresses data specifications, data integrity fundamentals, duplication, accuracy, translatability, timeliness, and availability.
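The text does not name a specific agreement measure. Cohen's kappa is one common choice for two readers because it corrects the raw agreement rate for the agreement expected by chance: kappa = (p_o - p_e) / (1 - p_e). The sketch below assumes each reader assigns a categorical verdict to the same list of items; the "pass"/"fail" labels are hypothetical.

```python
from collections import Counter


def cohens_kappa(rater_a, rater_b):
    """Chance-corrected agreement between two raters labelling the same items."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("ratings must be non-empty and of equal length")
    n = len(rater_a)
    # p_o: observed share of items on which both raters agree.
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # p_e: agreement expected by chance from each rater's label frequencies.
    counts_a, counts_b = Counter(rater_a), Counter(rater_b)
    expected = sum(counts_a[label] * counts_b[label] for label in counts_a) / (n * n)
    if expected == 1.0:
        # Both raters used a single identical label throughout; kappa is undefined,
        # so this sketch treats it as perfect agreement by convention.
        return 1.0
    return (observed - expected) / (1 - expected)


if __name__ == "__main__":
    reader_1 = ["pass", "pass", "fail", "pass", "fail", "pass"]
    reader_2 = ["pass", "fail", "fail", "pass", "fail", "pass"]
    print(round(cohens_kappa(reader_1, reader_2), 2))  # 0.67
```

Values near 1 indicate strong agreement beyond chance, and values near 0 indicate agreement no better than chance. The example readers agree on five of six items, which gives a kappa of about 0.67.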

Data Quality Frameworks

Various frameworks are applicable in different environments for data quality improvement. The MAMD framework, for instance, is a comprehensive model addressing best practices in data management, data quality management, and data governance. This framework helps align and establish relationships between different data management disciplines, enhancing data quality levels in organizations.

What are the Challenges of Data Quality?

Top 3 Challenges in Maintaining Data Quality

- Security and Privacy Issues: Significant challenges in data quality maintenance include secure computations, secure data storage, end-point input validation, real-time security monitoring, scalable analytics, cryptographically enforced security, granular access control, granular audits, and data provenance.

- Data Inconsistencies and Errors: Key issues also encompass data consistency, data deduplication, data accuracy, data currency, and information completeness. These aspects are crucial for ensuring that data accurately reflects the real-world scenarios it is intended to represent.

- Complexity in Big Data and AI Environments: The rise of big data and artificial intelligence brings its own challenges. Datasets are abundant and heterogeneous, arrive in high volumes, and are generated and processed at high speed. These factors make data quality harder to ensure and call for advanced data management techniques.

Emerging Challenges in the Era of Big Data and AI

While the ever-growing volume and velocity of data offer tremendous potential for insights and innovation, the era of big data and AI also introduces emerging challenges in data quality. These challenges, if left unaddressed, can have significant downstream impacts, as they directly affect the reliability and trustworthiness of the insights these technologies generate.

Here are some of the specific challenges in maintaining data quality in the big data and AI era:

- Rapid Data Throughput: Managing large volumes of data arriving at high speed is a significant challenge, requiring data quality assessment methods that remain accurate at that pace.

- Heterogeneous Access and Integration: Ensuring consistency and integration across diverse data sources and formats becomes increasingly complex.

- Quality Deterioration Over Time: Maintaining data quality over time is challenging, especially with the evolving nature of data and its uses.
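One way to catch quality deterioration in a high-throughput feed is to score each incoming batch as it arrives and compare the score with a baseline. The sketch below is a hypothetical illustration that tracks only the completeness of a single field. The baseline and the 5-point tolerance are assumed values that a real pipeline would set per indicator.

```python
from typing import Iterable, Iterator, List, Tuple


def batch_completeness(batch: List[dict], field: str) -> float:
    """Share of records in one batch where the field is populated."""
    if not batch:
        return 1.0
    return sum(1 for r in batch if r.get(field) is not None) / len(batch)


def flag_deterioration(
    batches: Iterable[List[dict]], field: str, baseline: float, max_drop: float = 0.05
) -> Iterator[Tuple[int, float]]:
    """Lazily yield (batch_index, score) for batches that fall too far below baseline.

    Because this is a generator, batches can be consumed from a stream one at a
    time without holding the whole dataset in memory.
    """
    for index, batch in enumerate(batches):
        score = batch_completeness(batch, field)
        if baseline - score > max_drop:
            yield index, score


if __name__ == "__main__":
    stream = [
        [{"email": "a@x.org"}, {"email": "b@x.org"}],
        [{"email": "c@x.org"}, {"email": None}],
        [{"email": "d@x.org"}, {"email": "e@x.org"}],
    ]
    print(list(flag_deterioration(stream, "email", baseline=1.0)))  # [(1, 0.5)]
```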

Is your business suffering from bad data quality? Contact us and learn more about how we can help your business!

Differences and Comparisons Regarding Data Quality

Data Quality vs. Data Integrity

Data Quality refers to the overall utility of a data set as a function of its ability to be easily processed and used by end-users. Key dimensions of data quality include accuracy, completeness, reliability, and relevance. Data quality is a multifaceted concept that varies with the requirements and expectations of its users. It is not just about the accuracy of the data but also encompasses its timeliness, consistency, validity, and uniqueness.

Data Integrity, on the other hand, is more focused on the accuracy and consistency of data over its lifecycle. It is a critical aspect of the design, implementation, and usage of any system which stores, processes, or retrieves data. The goal of data integrity is to ensure that data remains unaltered and consistent during storage, transfer, and retrieval. This includes maintaining data consistency, accuracy, and reliability from the point of creation to the point of use.
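A common technique for checking that data has remained unaltered during storage or transfer is to compare cryptographic digests computed before and after. The sketch below uses Python's standard `hashlib` and `json` modules, and the record layout is hypothetical. A plain digest detects accidental corruption. Resisting deliberate tampering, however, requires protecting the digest itself, for example with a keyed HMAC or a digital signature.

```python
import hashlib
import json


def fingerprint(record: dict) -> str:
    """SHA-256 digest of a record in a canonical JSON form (sorted keys, no spaces)."""
    canonical = json.dumps(record, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def verify(record: dict, expected_digest: str) -> bool:
    """True if the record still matches the digest taken at the source."""
    return fingerprint(record) == expected_digest


if __name__ == "__main__":
    # Hypothetical transaction record captured at the point of creation.
    original = {"account": "DE001", "amount": 250.0, "currency": "EUR"}
    digest = fingerprint(original)

    received = {"currency": "EUR", "amount": 250.0, "account": "DE001"}
    print(verify(received, digest))   # True: same content, different key order

    corrupted = {**original, "amount": 205.0}
    print(verify(corrupted, digest))  # False: a value changed in transit
```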

Data Quality vs. Data Cleansing

Data Quality is a proactive measure that focuses on ensuring the correctness, reliability, and validity of data at the point of entry into the system. It involves setting up standards and processes to prevent data errors and inconsistencies.

Data Cleansing (or data cleaning) is a reactive process that involves identifying and correcting (or removing) errors and inconsistencies in data to improve its quality. This process is typically conducted on data that has already been stored and is a key part of the maintenance of high-quality data. Data cleansing is essential in situations where data quality has been compromised and needs restoration to meet the required standards.
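The contrast between preventing errors at entry and cleansing stored data can be sketched in a few lines. In this hypothetical example, `accept` plays the role of a preventive check that rejects malformed email addresses before they are stored. `cleanse` is a reactive pass over existing records that normalizes addresses and removes invalid rows and duplicates. Treating the email address as the deduplication key, and lower-casing the whole address, are simplifying assumptions made for illustration.

```python
import re

# Simple email format rule shared by both functions (illustrative assumption).
EMAIL_PATTERN = re.compile(r"[^@\s]+@[^@\s]+\.[^@\s]+")


def normalize_email(value):
    """Trim whitespace and lower-case; missing values become an empty string."""
    return (value or "").strip().lower()


def accept(record, store):
    """Preventive: validate at the point of entry and store only clean records."""
    email = normalize_email(record.get("email"))
    if not EMAIL_PATTERN.fullmatch(email):
        return False
    store.append({**record, "email": email})
    return True


def cleanse(records):
    """Reactive: normalize stored records, dropping invalid rows and duplicates."""
    seen = set()
    cleaned = []
    for record in records:
        email = normalize_email(record.get("email"))
        if not EMAIL_PATTERN.fullmatch(email) or email in seen:
            continue  # invalid or duplicate: removed rather than repaired
        seen.add(email)
        cleaned.append({**record, "email": email})
    return cleaned


if __name__ == "__main__":
    legacy = [{"email": "A@x.org"}, {"email": "a@x.org "}, {"email": None}, {"email": "b@y.com"}]
    print(cleanse(legacy))  # [{'email': 'a@x.org'}, {'email': 'b@y.com'}]
```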

Data Quality vs. Data Governance

Data Quality is an aspect of the overall management of data that ensures the data is fit for its intended use in operations, decision-making, and planning. It involves the processes and technologies used to ensure data accuracy, completeness, reliability, and relevance.

Data Governance, meanwhile, refers to the overall management of the availability, usability, integrity, and security of the data employed in an organization. This includes the establishment of policies, procedures, and responsibilities that define how data is to be used, managed, and protected. Data governance encompasses a broader scope than data quality, including aspects like data policymaking, compliance, and data stewardship.

How to Improve Your Data Quality

Improving data quality is crucial for organizations to ensure the reliability and accuracy of their data-driven decisions. Various methods and metrics can be employed to measure and improve data quality across different industries.

- Setting Expectations and Defining Indicators: It is crucial to establish clear expectations for data quality and define specific indicators that will be used to measure it. This approach ensures that everyone involved understands what is required and how success will be gauged.

- Task-Based Data Quality Method (TBDQ): The TBDQ method is particularly effective in selecting optimal activities for data quality improvement in terms of cost and improvement, making it a pragmatic choice for organizations.

- Increase Quality Cost Percentage: Allocating a higher percentage of the budget to quality cost can significantly improve data quality, as it allows for more resources to be directed towards ensuring data accuracy and integrity.

- Improve3C Framework: This framework is effective in determining the relative currency order among records, that is, which records are more up to date. It also takes the temporal dimension of data into account, thus enhancing data quality.

- Downstream Customer Quality Metrics: In software quality improvement, using downstream customer quality metrics (CQM), upstream implementation quality index (IQI), and prioritization tools can focus development resources on the riskiest files.

- Standardized Spreadsheets: Introducing standardized spreadsheets to teams in quality improvement collaboratives can be a practical tool for collecting standardized data, thus enhancing the overall data quality.
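The first method above, setting expectations and defining indicators, can be made concrete by recording each indicator with an explicit acceptance threshold and checking measured scores against it. The indicator names and thresholds below are hypothetical examples, not recommended values. The measured scores are assumed to come from whatever profiling the organization already runs.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class Indicator:
    """A named data quality indicator with a minimum acceptable score (0 to 1)."""
    name: str
    threshold: float


def evaluate(indicators: List[Indicator], measured: Dict[str, float]) -> Dict[str, bool]:
    """Pass/fail per indicator; an indicator with no measurement counts as failing."""
    return {
        ind.name: ind.name in measured and measured[ind.name] >= ind.threshold
        for ind in indicators
    }


if __name__ == "__main__":
    expectations = [
        Indicator("completeness", 0.98),
        Indicator("validity", 0.95),
        Indicator("uniqueness", 1.0),
    ]
    report = evaluate(expectations, {"completeness": 0.99, "validity": 0.91})
    failing = [name for name, ok in report.items() if not ok]
    print(report)   # {'completeness': True, 'validity': False, 'uniqueness': False}
    print(failing)  # ['validity', 'uniqueness']
```

Keeping the thresholds in one explicit list gives everyone involved the same definition of success, which is the point of this step.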

These methods collectively offer a multifaceted approach to improving data quality, catering to various aspects like cost management, process optimization, and stakeholder engagement. Implementing these strategies can lead to significant improvements in the reliability and usefulness of data across various domains.

BearingPoint’s Data Quality Navigator

BearingPoint's Data Quality Navigator (DQN) is a pivotal tool for organizations aiming to enhance their data quality. DQN excels in identifying, analysing, and correcting data discrepancies, ensuring that businesses operate on clean, accurate, and up-to-date information. DQN provides a suite of functionalities tailored to tackle data-related challenges effectively. Through its real-time monitoring and automated correction processes, the DQN allows companies to enforce data quality rules that align with their specific business objectives, ensuring that the integrity and consistency of data are maintained across all systems.

Its success is underscored by numerous case studies, particularly one involving a retail chain that significantly reduced data redundancy, leading to improved inventory management and customer satisfaction. The DQN integrates smoothly with existing IT infrastructure, making it a smart choice for businesses looking to improve data quality without overhauling their entire systems. By utilizing the DQN, organizations can address various aspects of data quality, from eliminating duplicates and correcting inaccuracies to updating obsolete data, which is crucial for maintaining a competitive edge in today's fast-paced market.

Conclusion

Data quality is an essential aspect of modern business practice, and its significance extends across both operational and strategic decisions. As we have discussed, its impact on organizational success is profound, influencing everything from analytics to long-term planning.

The future points towards an increasing reliance on data quality, especially with the rise of big data and AI. These technologies demand high-quality data, and their effectiveness is contingent upon it. Therefore, businesses must prioritize data quality, utilizing tools like BearingPoint’s Data Quality Navigator to stay competitive.

In closing, maintaining superior data quality is not a one-time effort but a continuous endeavour. It is crucial to adapt to the evolving digital landscape, ensuring data remains accurate, relevant, and dependable. Embrace the journey towards exceptional data quality: it is an investment that pays dividends in informed decision-making and sustainable success.

Want to know more?

Take the next step towards enhancing your data quality. Evaluate your data management strategies, adopt advanced tools, and prepare for the future by placing data quality at the heart of your operations. Contact us for an individual consultation.